[GNU Manual] [No POSIX requirement] [Linux man] [FreeBSD man]

Summary

shred - overwrite a file to hide its contents, and optionally delete it

Lines of code: 1312

Principal syscall: write()

Support syscalls: sync(), stat(), fcntl(), ioctl()

Options: 17 (7 short, 10 long)

This utility originated with GNU Fileutils

Added to Fileutils in January 1999 [First version]

Number of revisions: 216

The shred utility overwrites file contents with data patterns whose order is chosen by the GNU implementation of the ISAAC pseudorandom number generator. Peter Gutmann's paper, "Secure Deletion of Data from Magnetic and Solid-State Memory", provides the basis for the design.

Helpers:

- clear_random_data() - Clears random data buffers on utility exit

- direct_mode() - Changes direct mode for a file descriptor via fcntl()

- dopass() - Performs a single pass to scrub the file data and sync to media

- dorewind() - Tries to rewind a file descriptor via lseek() or ioctl() for tapes

- dosync() - Pushes data to the file system for update

- do_wipefd() - The core shred function, which calls many passes (default 3)

- fillpattern() - Creates a fixed pattern at least 3 bytes long

- genpattern() - Schedules passes in a random order

- ignorable_sync_errno() - Tests whether an fsync() or fdatasync() error can be ignored

- incname() - Increments the characters in a name based on the nameset array

- known() - Verifies that a size is known (not negative or zero)

- passname() - Generates a pass name string

- periodic_pattern() - Tests that there is some entropy in the first 3 nibbles of input

- wipefd() - The shred procedure for a file descriptor

- wipefile() - The main shred procedure for a single file name

- wipename() - Obliterates a file name

- die() - Exits with a mandatory non-zero error and a message to stderr

- error() - Outputs an error message to standard error with possible process termination
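To make incname() more concrete, here is a minimal sketch of the odometer-style increment it describes. It is written in Python rather than the utility's C. The NAMESET alphabet and the list-of-characters representation are assumptions made for illustration, not copied from the source.

```python
# Assumed alphabet for illustration; the real nameset array may differ.
NAMESET = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ_."


def incname(name):
    """Advance `name` (a list of characters) in place, odometer style.

    Returns True if a new name was produced, or False if every position
    wrapped around and the name is back at its starting value.
    """
    for i in range(len(name) - 1, -1, -1):
        pos = NAMESET.index(name[i])
        if pos + 1 < len(NAMESET):
            name[i] = NAMESET[pos + 1]  # bump this position and stop
            return True
        name[i] = NAMESET[0]            # wrapped: reset and carry left
    return False
```

A caller would keep requesting the next candidate name until a rename succeeds or incname() reports that the name space is exhausted.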

Setup

The shred utility defines a global structure, struct Options, which holds all of the utility options set by the user during parsing.

An important global array, patterns[], holds the possible bit patterns used to overwrite file data.
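As a rough illustration of how a pattern table feeds a pass schedule, the Python sketch below mixes fixed patterns with random passes and shuffles the result. The PATTERNS table, the half-fixed/half-random split, and the function name are all hypothetical. The real genpattern() uses its own selection rules and its own random source.

```python
import random

# Hypothetical fixed patterns (1 to 3 bytes each); not the real patterns[] table.
PATTERNS = [
    b"\x00", b"\xff", b"\x55", b"\xaa",
    b"\x92\x49\x24", b"\x49\x24\x92", b"\x24\x92\x49",
]


def schedule_passes(n_passes, rng=None):
    """Return a shuffled list of passes; None marks a random-data pass."""
    if rng is None:
        rng = random.Random()
    # Assumption: roughly half the passes use fixed patterns, the rest random data.
    n_fixed = min(n_passes // 2, len(PATTERNS))
    # Sampling without replacement means no fixed pattern is ever repeated.
    passes = rng.sample(PATTERNS, n_fixed)
    passes += [None] * (n_passes - n_fixed)
    rng.shuffle(passes)
    return passes
```

Because the fixed patterns are sampled without replacement, the same fixed pattern can never be written twice in a row, which is the property the overwrite scheme cares about.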

main() adds a few more locals before starting parsing:

- c - The next option character to process

- file - The list of files to shred

- flags - The user options structure

- i - The iterator over the files to shred

- n_files - The number of files to shred

- ok - The final return status

- random_source - The source of random data to write to files

Parsing

Parsing breaks down the user-provided options to answer these questions about handling input data:

- Should shred be forced by overriding permissions?

- How many shred passes should occur?

- Which random source should be used?

- Should only the first few bytes of the target be shredded?

- Should a final pass of zeros be added to hide the shredding?
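The questions above map onto a handful of options. The sketch below models them with Python's argparse instead of the getopt_long() loop a C utility would use. The option names follow the shred manual (-f, -n, --random-source, -s, -z), but the parser, the pass_count() validator, and the plain-integer --size are simplifications assumed for the example.

```python
import argparse


def pass_count(text):
    """Validate the number of passes: must be a non-negative integer."""
    value = int(text)  # a ValueError here is reported by argparse as a usage error
    if value < 0:
        raise argparse.ArgumentTypeError("invalid number of passes: %r" % text)
    return value


def build_parser():
    parser = argparse.ArgumentParser(prog="shred-sketch")
    parser.add_argument("-f", "--force", action="store_true",
                        help="change permissions to allow writing if necessary")
    parser.add_argument("-n", "--iterations", type=pass_count, default=3,
                        help="number of overwrite passes")
    parser.add_argument("--random-source", metavar="FILE",
                        help="read random bytes from FILE")
    parser.add_argument("-s", "--size", type=int, metavar="N",
                        help="shred this many bytes (suffixes not handled here)")
    parser.add_argument("-z", "--zero", action="store_true",
                        help="add a final overwrite with zeros")
    parser.add_argument("files", nargs="+", metavar="FILE")
    return parser
```

With this sketch, `build_parser().parse_args(["-n", "5", "-z", "a.txt"])` yields five iterations with a final zero pass, and an empty argument list exits with a usage message.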

Parsing failures

These failure cases are explicitly checked:

- No shred target provided

- Nonsensical number of passes

- Specifying multiple random sources

- Unknown option used

User-specified parsing failures result in a short error message followed by the usage instructions. Access-related parsing errors die with an error message.

Execution

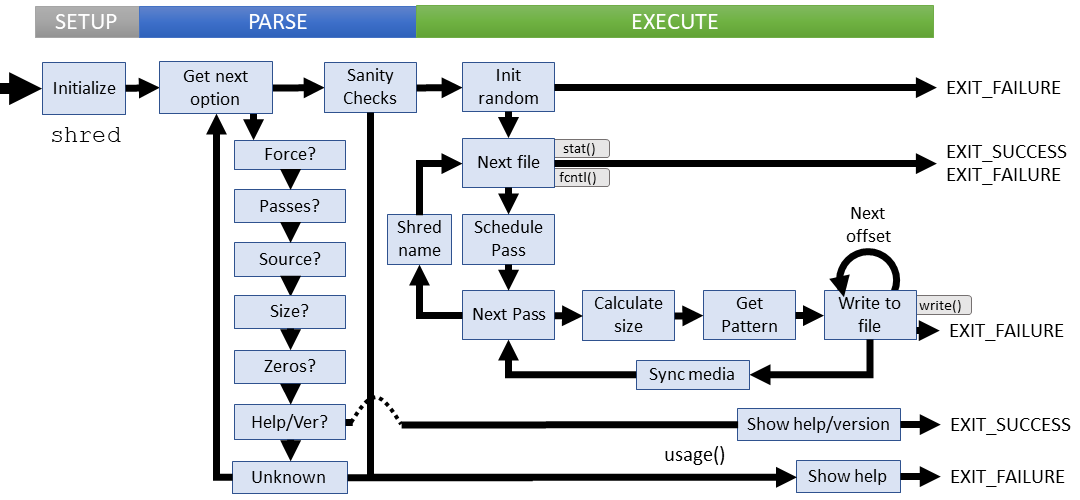

shred is surprisingly self-contained within coreutils, probably since the ISAAC implementation isn't widely used among other GNU packages. The process within shred is easy to follow even if you don't grok the secure overwrite process. It goes like this:

- Initialize the random source (used to select pattern schedule)

- Get the next file (verify, open)

- Generate/schedule the pattern sequence

- Prepare for passes over the file (default 3, changed from 25 in 2009):

  - Calculate the block size

  - Get the next block pattern

  - Write the block and advance the offset within the file

  - Repeat the write until all blocks are written

  - Sync changes to media

- If the file is to be deleted, obliterate the file name

- Get the next file until all work is complete
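The per-file steps above can be sketched in a few lines. This is a simplified Python model, not the utility's C code: os.urandom() stands in for the ISAAC generator, the function names and default block size are assumptions, and name obliteration, permission handling, and tape rewinding are left out.

```python
import os


def fill_pattern(pattern, size, offset=0):
    """Build `size` bytes of a repeating pattern, phased to the file offset."""
    start = offset % len(pattern)
    rotated = pattern[start:] + pattern[:start]
    reps = size // len(rotated) + 1
    return (rotated * reps)[:size]


def do_pass(fd, size, pattern, block_size):
    """Overwrite `size` bytes once (pattern=None means random), then sync."""
    os.lseek(fd, 0, os.SEEK_SET)           # rewind to the start of the file
    offset = 0
    while offset < size:
        n = min(block_size, size - offset)
        if pattern is None:
            block = os.urandom(n)           # stand-in for the ISAAC stream
        else:
            block = fill_pattern(pattern, n, offset)
        offset += os.write(fd, block)       # advance by what was actually written
    os.fsync(fd)                            # push this pass to the media


def wipe_file(path, passes, block_size=64 * 1024):
    """Run every scheduled pass over an existing file, in order."""
    fd = os.open(path, os.O_WRONLY)
    try:
        size = os.fstat(fd).st_size
        for pattern in passes:
            do_pass(fd, size, pattern, block_size)
    finally:
        os.close(fd)
```

Syncing after every pass matters here: without it, the operating system could coalesce several passes in its cache and only the final one would reach the device.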

Failure cases:

- Failure to initialize the random source

- Unlink fails

- Unable to stat() or fcntl() file

- Unable to open or close files

- Unable to write to file at any point

- File appears to have a negative size

- The file is too large

- Unable to seek or rewind (ioctl)

All failures at this stage output an error message to STDERR and return without displaying usage help.

Extra comments

Source of random

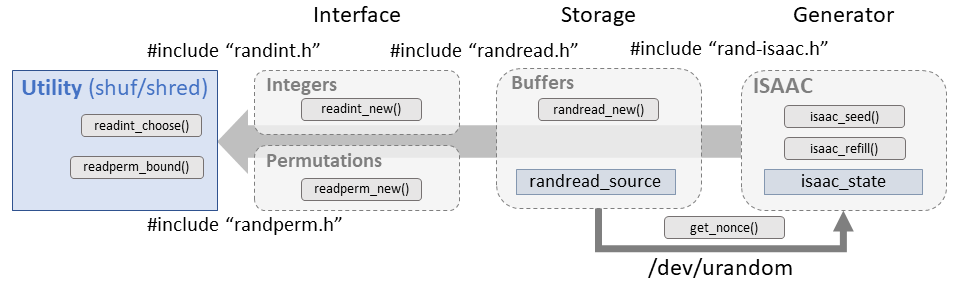

Coreutils includes a self-contained implementation of the ISAAC cipher used in both the shuf and shred utilities. There's a fair amount of plumbing between the entropy source and the utility needed to generate, store, and distribute the numbers. This allows a minimal framework to bring random numbers in to this (and future) utilities. Consider the following diagram:
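The layering of entropy source, buffer, and number generator can be modeled in a few lines. The sketch below is Python and purely illustrative: RandomSource and rand_below() are hypothetical names, os.urandom() replaces the ISAAC generator, and the real plumbing in coreutils is organized differently.

```python
import os


class RandomSource:
    """Buffered byte source: reads from a file if given, else from os.urandom()."""

    def __init__(self, path=None, buffer_size=4096):
        self._file = open(path, "rb") if path else None
        self._buffer_size = buffer_size
        self._buf = b""

    def read(self, n):
        """Return exactly n bytes, refilling the buffer as needed."""
        while len(self._buf) < n:
            want = max(self._buffer_size, n - len(self._buf))
            chunk = self._file.read(want) if self._file else os.urandom(want)
            if not chunk:
                raise EOFError("random source exhausted")
            self._buf += chunk
        out, self._buf = self._buf[:n], self._buf[n:]
        return out

    def close(self):
        if self._file:
            self._file.close()


def rand_below(source, limit):
    """Return a uniform integer in [0, limit) using rejection sampling."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    n_bytes = max(1, ((limit - 1).bit_length() + 7) // 8)
    space = 256 ** n_bytes
    cutoff = space - space % limit      # values at or above this would bias the result
    while True:
        value = int.from_bytes(source.read(n_bytes), "big")
        if value < cutoff:
            return value % limit
```

Rejecting values above the cutoff keeps the result uniform, which a plain modulo would not when the limit does not divide the byte range evenly.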

Usage warning

There are operational assumptions built into the design that should be noted:

First, the method was designed around magnetic storage as noted in the paper.

The general concept behind an overwriting scheme is to flip each magnetic domain on the disk back and forth as much as possible (this is the basic idea behind degaussing) without writing the same pattern twice in a row.

Second, the utility's documentation notes that shred may be ineffective on certain (read: modern) file systems:

CAUTION: Note that shred relies on a very important assumption: that the file system overwrites data in place. This is the traditional way to do things, but many modern file system designs do not satisfy this assumption. The following are examples of file systems on which shred is not effective, or is not guaranteed to be effective in all file system modes:

- log-structured or journaled file systems, such as those supplied with AIX and Solaris (and JFS, ReiserFS, XFS, Ext3, etc.)

- file systems that write redundant data and carry on even if some writes fail, such as RAID-based file systems

- file systems that make snapshots, such as Network Appliance's NFS server

- file systems that cache in temporary locations, such as NFS version 3 clients

- compressed file systems

The rise of SSDs and error-resistant file systems may be pushing shred closer to retirement. The bottom line is to know your situation and your purpose before employing tools like shred.

Your mileage may vary